...

Categories

Chart

Bar

Pie

Table

Sitemap

SWOT Analysis

Area

Flowchart

Line

Map

Column

Organizational

Venn Diagram

Card

Wedding

Baby

Easter

Anniversary

Graduation

Greeting

Congratulations

Thank You

Valentine's Day

Birthday

Halloween

Mother's Day

Thanksgiving

Father's Day

Invitation

Business

New Year

Christmas

Halloween

Father's Day

Baby Shower

Christening

Mother's Day

Thanksgiving

Engagement

Graduation

Party

Easter

Anniversary

Poster

Birthday

Music

Travel

Movie

Animal & Pet

Party

Motivational

Environmental Protection

Industrial

Fashion & Beauty

Kids

Non-profit

Exhibition

Law & Politics

Art & Entertainment

Sale

Holiday & Event

Business

Coronavirus

Sports & Fitness

Life Photo Exhibition

Art Classes

Education Class Art Center

Woman Photo Movie Poster

Blue High School Graduation Prom Poster

Simple Women Photography Exhibition Poster

Film Videotape and Popcorn Movie Night Poster

Artistic Graffiti Group Recruitment Poster

Rustic Brown Art Museum Poster

Poster Movie 1

Pacific Ocean Movie Poster

Dance Show

Couple Love Movie Poster

White and Orange Summer Movie Festival Poster

Traces of Time Exhibition Poster

Bulb Image Graphic Design Studio Poster

Black and Golden Theatre Poster

Art Exhibition

Painting Festival Ticket Booking Poster

Red Masks Play Poster

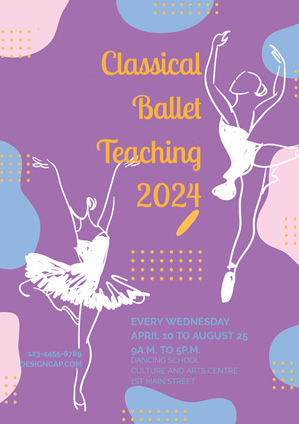

Education Class Ballet Teaching

Dance School

White Mask Ticket Information Opera Poster

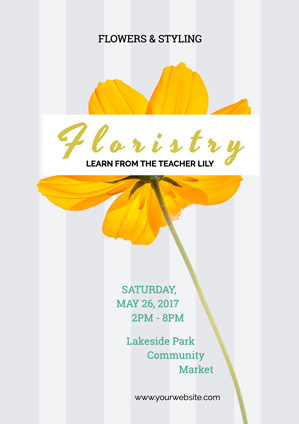

Education Class Floristry

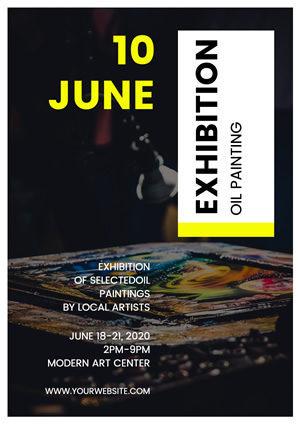

Artistic Oil Painting Exhibition Poster

Beautiful Oil Painting Exhibition Poster

Simple Black and White Award Poster

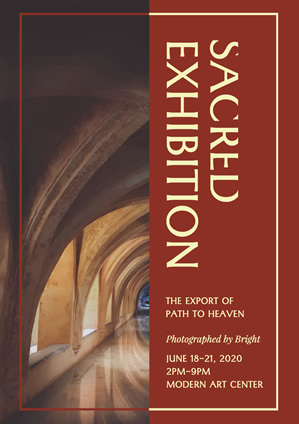

Red Architecture Exhibition Poster

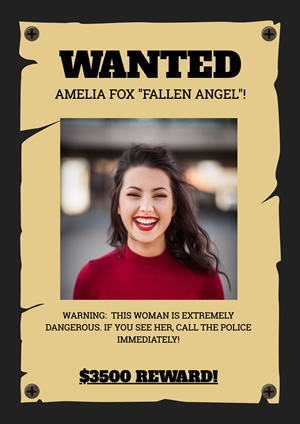

Framed Funny Photo Wanted Poster

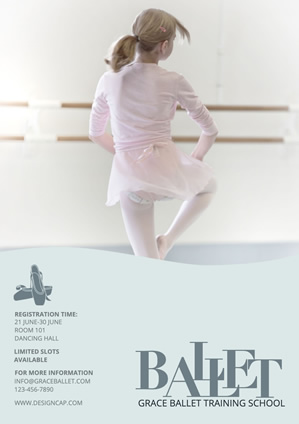

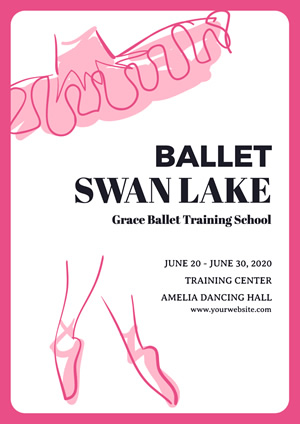

White and Pink Ballet Training Poster

Blue Movie Festival Poster

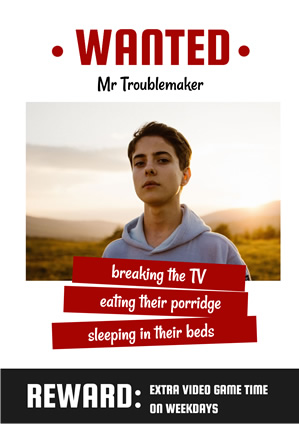

White Funny Photo Wanted Poster

Red Dance Center Promotional Poster

Black and White Portrait Photography Poster

Ticket Information Opera Poster

Funny Photo Wanted Poster

Poster Love

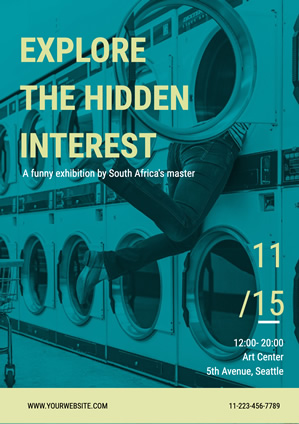

Funny Exhibition Poster

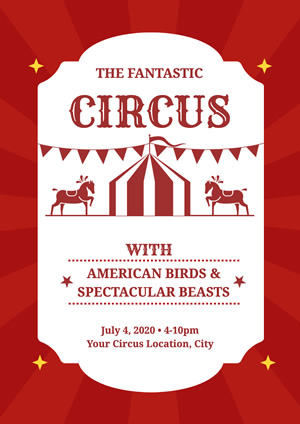

Red Fantastic Circus Poster
