Feature Highlights You Won't Want to Miss

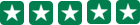

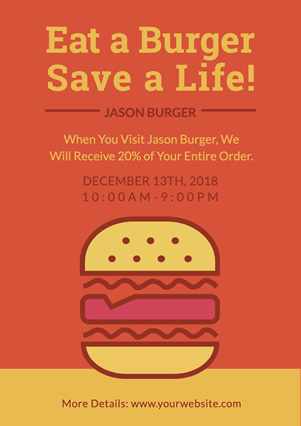

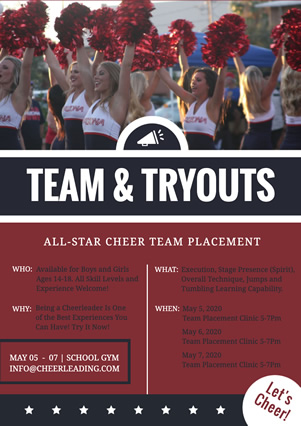

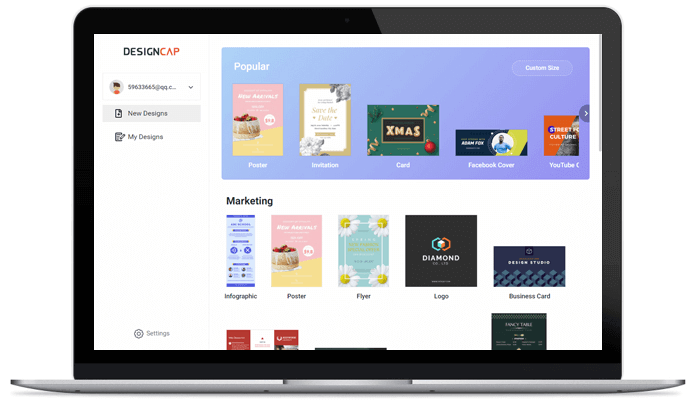

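Hundreds of Templates

Get inspired by hundreds of beautifully designed templates and create polished flyers to promote your business.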

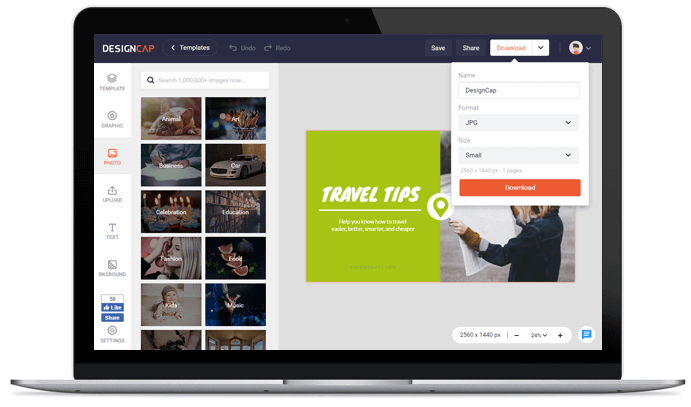

Rich Resources

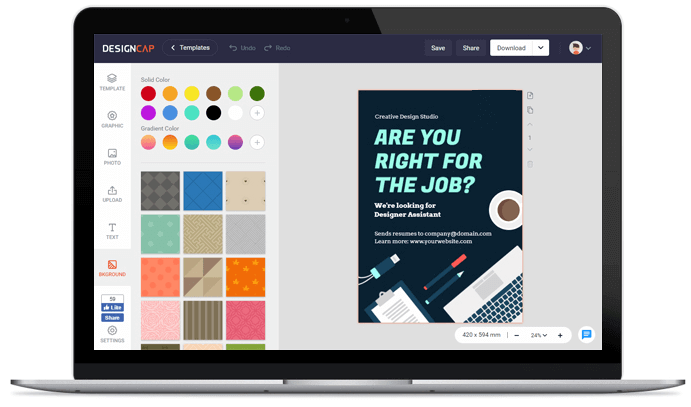

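Decorate and refine your flyer design with a large library of images, illustrations, shapes, fonts, and other resources.

Over a Hundred Fonts

With over a hundred fonts to choose from, you can convey your message in a way that stands out.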

Fully Customizable

With a wide range of powerful editing tools, you can design a professional flyer in just a few clicks.

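How to Create a Flyer Design in Three Steps

1. Choose a Template

Pick a template to start your flyer design.

2. Customize

Use the simple yet powerful editing tools to refine your flyer.

3. Export

Save your flyer to your computer or share it online.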

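User Reviews

DesignCap is an online tool that makes designing posters simple. It offers hundreds of design templates of many different types, so you can pick one that matches the theme of the poster you want to make. It works well for students and small business owners alike.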

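DesignCap is a handy graphic design helper: you can apply the built-in templates to quickly generate all kinds of posters, and you can also edit the content yourself.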

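The interface shows many template modules to choose from, and text and other elements can be swapped out very conveniently! The features are enough to meet most users' needs.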