
Why choose the DesignCap resume maker

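Professionally designed resume templates

DesignCap offers a large collection of resume templates suited to all kinds of jobs. Whatever position you are applying for, you can find inspiration here.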

Simple, easy-to-use text editor

DesignCap provides a wide range of text editing features. You can easily change the font, size, color, spacing, and alignment.

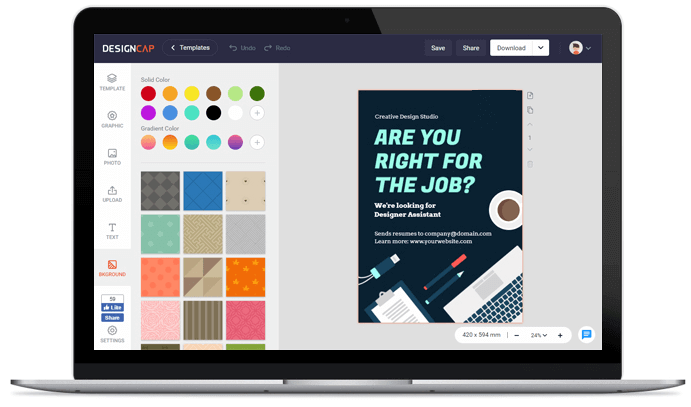

Powerful image editor

To add a picture to your resume, just click to upload it and then edit it: crop the image, apply a filter, add a border, and more.

Quick and simple

Making a resume with DesignCap saves a lot of time. Even if this is your first resume, you can finish the design with little effort.

Make a resume in three steps

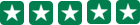

1. Choose a template

Pick a resume template you like.

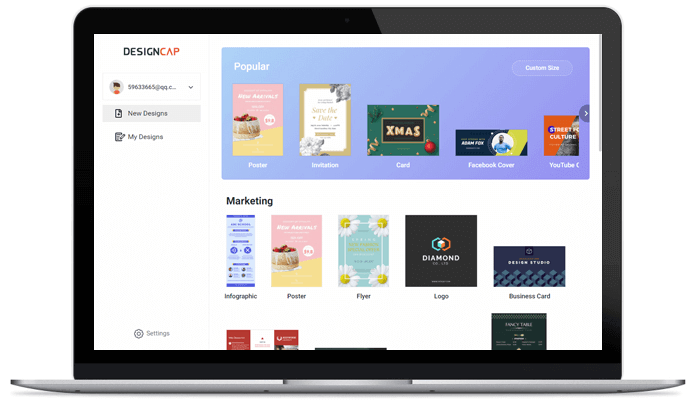

2. Customize

Add your contact details, work experience, education, and so on.

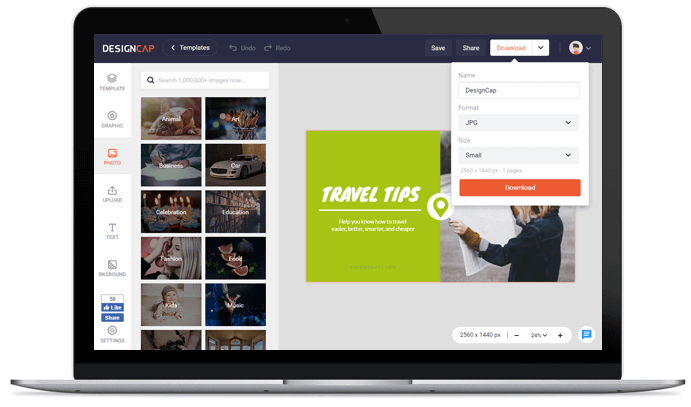

3. Export

Download your resume, then send it to others or print it directly.

User reviews

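A simple, practical website for making the poster or flyer you want online. With templates you can quickly create clean, attractive designs and download print-quality image files!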

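DesignCap is a website for making posters online. You can start from a template (there are templates for many different scenarios), then simply replace the text in a "foolproof" way; it also lets you rearrange the layout and redesign it yourself.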

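DesignCap offers a large number of templates that help users easily create highly personalized designs such as posters, greeting cards, Facebook covers, and YouTube banners.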